Google has announced virtual try-on for apparel, a new feature that shows users how clothes look on real models with different body shapes and sizes. It captures details such as how a garment drapes, folds, clings, stretches, and wrinkles. To make this possible, Google’s shopping AI researchers developed a new generative AI model that creates lifelike depictions of clothing on people.

This matters because apparel is one of the most-searched shopping categories, yet many online shoppers struggle to picture how clothes will look on them before buying. According to a survey, 42 percent of online shoppers feel that images of models don’t represent them, and 59 percent are dissatisfied when an item they bought online looks different on them than they expected. Google’s virtual try-on tool on Search is meant to help shoppers judge whether a piece of clothing suits them before they buy it.

Virtual try-on for apparel shows how clothes look on a variety of real models. Google’s new generative AI model takes a single image of a garment and realistically depicts how it would drape, fold, cling, stretch, and form wrinkles and shadows on a diverse set of real models in different poses. The models range in size from XXS to 4XL and represent different skin tones (based on the Monk Skin Tone Scale), body shapes, ethnicities, and hair types.

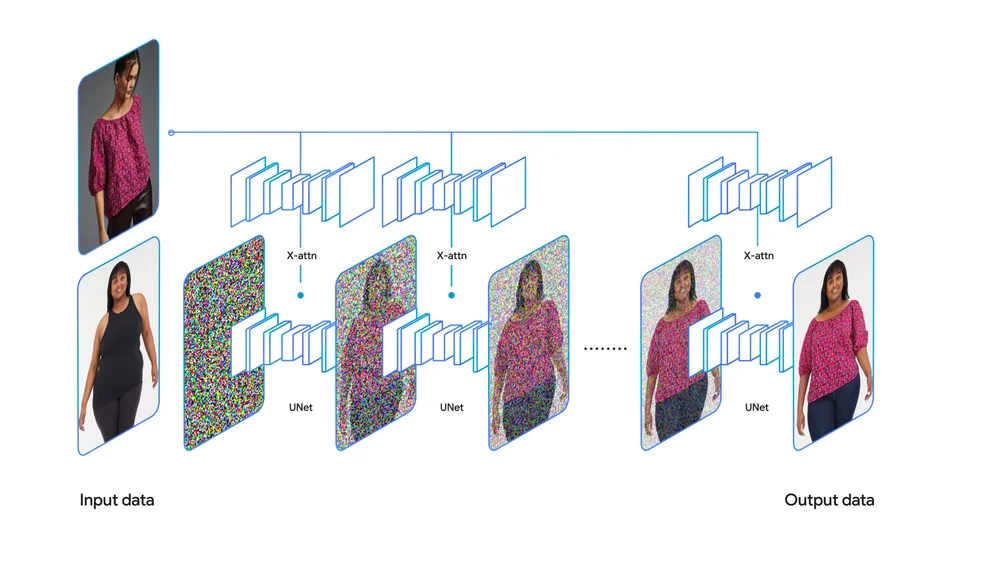

To understand how this model works, it helps to first explain diffusion. Diffusion models are built around a process that gradually adds random noise to an image until it becomes unrecognizable; the model then learns to reverse that process, removing the noise step by step until a clean image is recovered. Text-to-image models such as Imagen combine diffusion with text encoded by a large language model (LLM) to generate a realistic image from a text prompt alone.
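The forward (noising) and reverse (denoising) halves of diffusion can be made concrete in a few lines of Python. This is a minimal sketch of the standard DDPM formulation, not Imagen’s or Google’s implementation: the linear noise schedule (1e-4 to 0.02 over 1,000 steps) is the one used in the original DDPM paper, and the helper names and the toy four-pixel “image” are hypothetical. A trained network would *predict* the noise; here the true noise is handed back in to show that, given a perfect prediction, the clean image is recoverable.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances that grow linearly (the schedule used in the DDPM paper)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar[t] = product of (1 - beta) up to step t: the share of signal variance left."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng):
    """Forward process, jumping straight to step t in closed form:
    x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise."""
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(a) * p + math.sqrt(1.0 - a) * n for p, n in zip(x0, noise)]
    return x_t, noise


def estimate_x0(x_t, predicted_noise, t, alpha_bars):
    """Reverse direction: subtract the (predicted) noise and rescale to estimate the clean image."""
    a = alpha_bars[t]
    return [(v - math.sqrt(1.0 - a) * n) / math.sqrt(a) for v, n in zip(x_t, predicted_noise)]


rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
image = [0.2, -0.5, 0.9, 0.0]  # hypothetical 4-pixel image, values scaled to [-1, 1]

# By the last step almost none of the original signal remains in x_late.
x_late, noise = add_noise(image, 999, alpha_bars, rng)
print(f"signal variance left: t=10 -> {alpha_bars[10]:.4f}, t=999 -> {alpha_bars[999]:.6f}")

# Stand-in for a trained network: hand back the true noise as a "perfect" prediction.
recovered = estimate_x0(x_late, noise, 999, alpha_bars)
```

Real samplers start from pure noise and run the reverse process over many steps, because a learned noise estimate is never exact; the single-step inversion here only illustrates what “removing the noise” means mathematically.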

Inspired by Imagen, Google approached virtual try-on (VTO) with diffusion, but with a twist: instead of conditioning the diffusion process on text, it uses a pair of images, one of a garment and one of a person. Each image is fed into its own neural network, a U-Net, and the two networks share information through a mechanism called “cross-attention.” The output is a photorealistic image of the person wearing the garment. This combination of image-based diffusion and cross-attention forms the core of Google’s new AI model for virtual try-on.
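Cross-attention itself is a standard operation, and a small pure-Python version shows the idea: one stream supplies *queries*, the other supplies *keys* and *values*, and each query position receives a weighted mix of the other stream’s features. Google describes its networks only at a high level, so the details below are assumptions for illustration: that the person stream queries the garment stream, the 2-dimensional toy features, and the identity matrices standing in for the projections `w_q`, `w_k`, `w_v` (a real U-Net learns these and applies attention across many feature maps).

```python
import math


def project(vectors, weights):
    """Multiply each row vector by a (d_in x d_out) weight matrix."""
    d_out = len(weights[0])
    return [[sum(v[i] * weights[i][j] for i in range(len(v))) for j in range(d_out)] for v in vectors]


def softmax(scores):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(person_feats, garment_feats, w_q, w_k, w_v):
    """Scaled dot-product cross-attention (assumed direction: person queries garment).
    Returns the attended features and the attention weights for each person location."""
    q = project(person_feats, w_q)
    k = project(garment_feats, w_k)
    v = project(garment_feats, w_v)
    scale = 1.0 / math.sqrt(len(k[0]))
    outputs, weights = [], []
    for qi in q:
        scores = [scale * sum(a * b for a, b in zip(qi, kj)) for kj in k]
        attn = softmax(scores)  # how strongly this person location looks at each garment location
        weights.append(attn)
        outputs.append([sum(attn[j] * v[j][d] for j in range(len(v))) for d in range(len(v[0]))])
    return outputs, weights


# Hypothetical toy inputs: 3 person-image locations and 2 garment-image locations, 2 features each.
identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for learned projection matrices
person = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
garment = [[2.0, 0.0], [0.0, 2.0]]
fused, attn = cross_attention(person, garment, identity, identity, identity)
```

Because each row of attention weights sums to 1, every person location ends up with a weighted average of garment features; in this sketch, that is the channel through which information about the garment flows into the person branch.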

Shoppers in the United States can now virtually try on women’s tops from brands across Google, including Anthropologie, Everlane, H&M, and LOFT. By tapping products marked with the “Try On” badge on Search, users can choose the model they identify with most.

The technology is built on the Shopping Graph, an extensive database of products and sellers, which allows it to scale to more brands and items over time. Keep an eye out for more virtual try-on options, including men’s tops, which are set to launch later this year.

To help shoppers find the right piece, Google has also introduced guided refinements. Powered by machine learning and new visual matching algorithms, they let users narrow a product search using inputs such as color, style, and pattern. Unlike a single physical store, the feature surfaces options from retailers across the web. Guided refinements appear within product listings and are currently available for tops, with possible expansion to other categories in the future.
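Google has not said how guided refinements are implemented. One plausible shape for the idea, sketched here purely as a hypothetical, is to filter candidate listings by the attributes a shopper selects (color, style, pattern) and then rank what remains by visual similarity to an item the shopper likes. The `Product` record, its hand-written embedding vectors, and the `refine` function are illustrative inventions; a real system would obtain embeddings from a trained vision model.

```python
import math
from dataclasses import dataclass


@dataclass
class Product:
    """Hypothetical listing record; real listings would come from a product database."""
    name: str
    retailer: str
    color: str
    style: str
    pattern: str
    embedding: tuple  # hypothetical visual feature vector from some image model


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def refine(products, reference, **selected):
    """Keep products matching every selected attribute, then rank by visual similarity."""
    matches = [p for p in products if all(getattr(p, attr) == value for attr, value in selected.items())]
    return sorted(matches, key=lambda p: cosine_similarity(p.embedding, reference.embedding), reverse=True)


liked = Product("Reference tee", "Retailer A", "blue", "casual", "solid", (1.0, 0.0, 0.0))
catalog = [
    Product("Striped blouse", "Retailer B", "blue", "casual", "striped", (0.9, 0.1, 0.0)),
    Product("Linen top", "Retailer C", "blue", "casual", "solid", (0.6, 0.8, 0.0)),
    Product("Knit top", "Retailer D", "blue", "casual", "solid", (0.95, 0.05, 0.1)),
    Product("Silk cami", "Retailer E", "red", "dressy", "solid", (1.0, 0.0, 0.0)),
]
results = refine(catalog, liked, color="blue", pattern="solid")
print([p.name for p in results])  # -> ['Knit top', 'Linen top']
```

Filtering first keeps the similarity ranking cheap and guarantees every result honors the shopper’s explicit choices; the similarity step then orders items whose attributes all match.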

Discover more from SNAP TASTE
