Chalmers University of Technology in Sweden is launching a new open dataset called Reeds, in collaboration with the University of Gothenburg, Rise (Research Institutes of Sweden), and the Swedish Maritime Administration.

The dataset consists of recordings of the test vehicle’s surroundings. To create the most challenging conditions possible, and thus make the task harder for the software algorithms, the researchers chose to use a boat, whose movements relative to its surroundings are more complex than those of vehicles on land. Ola Benderius, Associate Professor at the Department of Mechanics and Maritime Sciences at Chalmers University of Technology, is leading the project.

For self-driving vehicles to work, they need to interpret and understand their surroundings. To achieve this, they use cameras, sensors, radar and other equipment to ‘see’ their environment. This form of artificial perception allows them to adapt their speed and steering, much as human drivers react to changing conditions around them. The dataset was recorded with an advanced research boat that travels predetermined routes around western Sweden under different weather and light conditions.

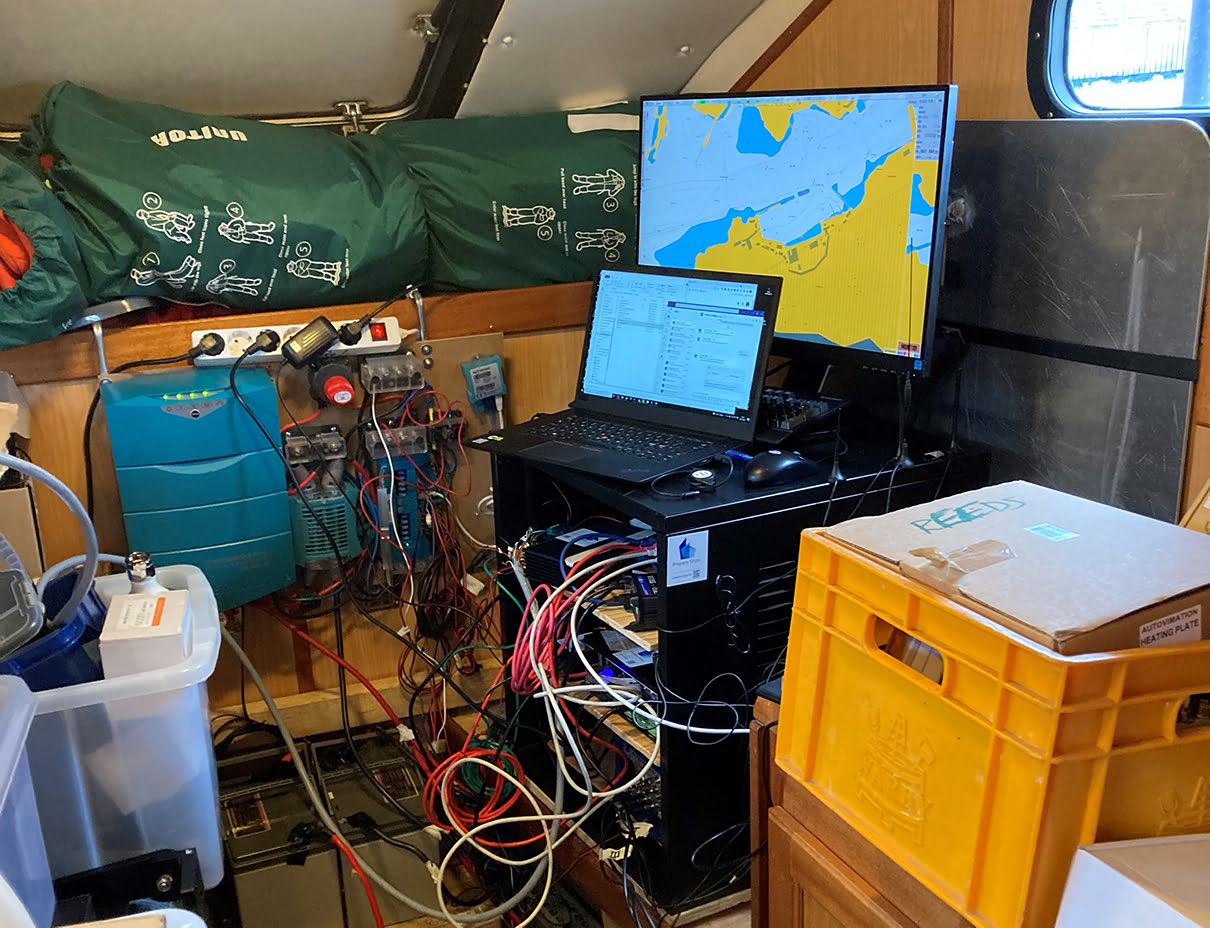

The boat is equipped with cameras, laser scanners, radar, motion sensors and positioning systems. Reeds’ logging platform consists of an instrumented 13 m boat with six high-performance vision sensors, two lidars, a 360° radar, a 360° documentation camera system, and a three-antenna GNSS system as well as a fiber-optic gyro IMU, used for ground-truth measurements. All sensors are calibrated into a single vehicle frame.
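Calibrating all sensors into a single vehicle frame means that each sensor has an extrinsic pose (a rotation and a translation) relative to the boat, so that measurements from different sensors can be expressed in common coordinates. The following is a minimal sketch of applying such a pose to a point, assuming a roll-pitch-yaw (ZYX) angle convention and a made-up lidar mounting pose; the function names are hypothetical and Reeds’ actual calibration format is not described in this article.

```python
import math

Vec3 = tuple[float, float, float]


def rotation_zyx(roll: float, pitch: float, yaw: float) -> list[list[float]]:
    """Rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll), angles in radians (assumed convention)."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def sensor_to_vehicle(point: Vec3, roll: float, pitch: float, yaw: float,
                      translation: Vec3) -> Vec3:
    """Express a point measured in a sensor's own frame in the common vehicle frame: R @ p + t."""
    r = rotation_zyx(roll, pitch, yaw)
    x, y, z = (sum(r[i][j] * point[j] for j in range(3)) + translation[i] for i in range(3))
    return (x, y, z)


# Hypothetical mounting: a lidar 2 m forward and 1 m up, turned 90 degrees to port.
# A return 1 m straight ahead of the lidar lands 1 m to the side in the vehicle frame.
p = sensor_to_vehicle((1.0, 0.0, 0.0), roll=0.0, pitch=0.0, yaw=math.pi / 2,
                      translation=(2.0, 0.0, 1.0))
print(tuple(round(c, 6) for c in p))  # (2.0, 1.0, 1.0)
```

Once every sensor has such a pose, camera, lidar and radar detections can be fused or compared directly, because they all live in one coordinate system.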

The largest data volume is generated by the six high-performance cameras from the Oryx ORX-10G-71S7 series. Each monochrome camera can generate up to 0.765 GB/s (3208×2200 maximum resolution at 10-bit depth and 91 fps). Each frame is passed through an Nvidia Quadro card for lossless compression in HEVC format. By comparison, the second-largest producers of data are the lidars, each writing 4,800,000 points per second, corresponding to about 75 MB/s.

Each of the two servers connects to three cameras, and recordings are written to 15.36 TB SSD drives. As a result, the full logging system can be active for around 76 min at a time. After such a logging run, the SSD can be dumped onto a SATA disk in about 25 h before further post-processing into the cloud service backend. A full trip of data corresponds to two such disks, about 30 TB in total.
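As a rough check of these figures, the raw camera rate follows from resolution × bit depth × frame rate. The sketch below assumes tightly packed 10-bit pixels with no interface overhead; that is an assumption, since the article does not say how the 0.765 GB/s figure was derived. Under it, one camera produces about 802.8 MB/s in decimal units, which is about 765.6 MiB/s, so the quoted figure appears to be expressed in binary units. The same arithmetic gives roughly 16 bytes per lidar point and a sustained SSD-to-SATA copy rate of about 171 MB/s.

```python
# Back-of-the-envelope check of the camera, lidar and storage figures.
# Assumption: raw frames are packed at exactly 10 bits per pixel with no
# protocol overhead; real sensors and interfaces add some padding.

WIDTH, HEIGHT = 3208, 2200  # maximum resolution (pixels)
BIT_DEPTH = 10              # bits per pixel (monochrome)
FPS = 91                    # frames per second


def camera_rate_bytes_per_s(width: int, height: int, bit_depth: int, fps: int) -> float:
    """Raw (uncompressed) data rate of one camera in bytes per second."""
    bits_per_frame = width * height * bit_depth
    return bits_per_frame / 8 * fps


rate = camera_rate_bytes_per_s(WIDTH, HEIGHT, BIT_DEPTH, FPS)
print(f"per camera: {rate / 1e6:.1f} MB/s = {rate / 2**20:.1f} MiB/s")  # 802.8 MB/s = 765.6 MiB/s
print(f"six cameras: {6 * rate / 1e9:.2f} GB/s")                       # 4.82 GB/s

# Lidar: 4.8 million points/s at about 75 MB/s implies the bytes stored per point.
LIDAR_POINTS_PER_S = 4_800_000
LIDAR_BYTES_PER_S = 75e6
print(f"lidar: {LIDAR_BYTES_PER_S / LIDAR_POINTS_PER_S:.1f} bytes/point")  # 15.6 bytes/point

# Copying one 15.36 TB SSD to a SATA disk in about 25 h implies this sustained rate.
SSD_BYTES = 15.36e12
DUMP_SECONDS = 25 * 3600
print(f"dump: {SSD_BYTES / DUMP_SECONDS / 1e6:.0f} MB/s")  # 171 MB/s
```

These are raw, pre-compression numbers; the lossless HEVC step on the GPU changes how much actually reaches the SSDs.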

To enable fairer benchmarking of algorithms, and to avoid moving large amounts of data, the Reeds dataset comes with an evaluation backend running in the cloud. External researchers upload their algorithms as source code or as binaries, and the evaluation and comparison with other algorithms are then carried out automatically. The data collection routes were planned to support stereo vision and depth perception, optical flow and scene flow, odometry and simultaneous localization and mapping, object classification and tracking, semantic segmentation, and scene and agent prediction. The automated evaluation measures not only algorithm accuracy against annotated data or ground-truth positioning, but also per-frame execution time and feasibility for formal real-time operation, expressed as the standard deviation of the per-frame execution time. In addition, each algorithm is tested on 12 preset combinations of resolution and frame rate, each matching one found in another dataset. Reeds can therefore be viewed as a superset of those datasets, since it can mimic their data properties.
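The timing part of such an evaluation can be pictured as a loop that resamples the recording to a target resolution and frame rate, runs the submitted algorithm on each frame, and summarizes per-frame execution times, including their standard deviation. The sketch below is hypothetical: `decimate_indices`, `resize_nearest` and `evaluate_preset` are illustrative names, frame dropping and nearest-neighbour downscaling are assumed resampling methods, and the preset in the usage lines is invented rather than one of the 12 Reeds presets.

```python
import statistics
import time
from typing import Callable, Sequence

Frame = list[list[int]]  # a monochrome image as rows of pixel values


def decimate_indices(n_frames: int, source_fps: float, target_fps: float) -> list[int]:
    """Pick source frame indices that approximate a lower target frame rate by dropping frames."""
    if not 0 < target_fps <= source_fps:
        raise ValueError("target_fps must be positive and not exceed source_fps")
    n_out = int(n_frames * target_fps / source_fps)
    return [int(k * source_fps / target_fps) for k in range(n_out)]


def resize_nearest(image: Frame, out_w: int, out_h: int) -> Frame:
    """Nearest-neighbour downscaling to mimic a lower-resolution sensor."""
    in_h, in_w = len(image), len(image[0])
    return [[image[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
            for y in range(out_h)]


def evaluate_preset(algorithm: Callable[[Frame], object], frames: Sequence[Frame],
                    source_fps: float, preset: tuple[int, int, float]) -> dict:
    """Run `algorithm` on frames resampled to (width, height, fps) and time each call."""
    out_w, out_h, target_fps = preset
    times = []
    for i in decimate_indices(len(frames), source_fps, target_fps):
        frame = resize_nearest(frames[i], out_w, out_h)
        start = time.perf_counter()
        algorithm(frame)
        times.append(time.perf_counter() - start)
    return {
        "frames": len(times),
        "mean_s": statistics.mean(times),
        # Spread of per-frame time: a low value indicates predictable, real-time-friendly behaviour.
        # statistics.stdev needs at least two timed frames.
        "stdev_s": statistics.stdev(times),
        "worst_s": max(times),
        "budget_s": 1.0 / target_fps,  # time available per frame at the target rate
    }


# Hypothetical usage: 182 tiny synthetic 10-bit frames "recorded" at 91 fps,
# evaluated at an invented preset of 4x3 pixels and 10 fps.
frames = [[[(x + y + t) % 1024 for x in range(8)] for y in range(6)] for t in range(182)]
report = evaluate_preset(lambda f: sum(map(sum, f)), frames, 91.0, (4, 3, 10.0))
print(report["frames"])  # 20
```

Reporting the standard deviation alongside the mean separates an algorithm that is fast on average but occasionally stalls from one whose per-frame cost is consistently predictable, which is what matters for real-time use.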

During the project, Reeds has been tested and further developed by other researchers at Chalmers. They have worked on automatic recognition and classification of other vessels, measurement of the boat’s own movements based on camera data, 3D modeling of the environment, and AI-based removal of water droplets from camera lenses.

Reeds also provides the conditions for fair comparisons between different researchers’ software algorithms. Researchers upload their software to Reeds’ cloud service, where the evaluation on the data and the comparison with other groups’ software take place completely automatically. The results of the comparisons are published openly, so anyone can see which researchers around the world have developed the best methods of artificial perception in different areas.

The project began in 2020 and has been run by Chalmers University of Technology in collaboration with the University of Gothenburg, Rise and the Swedish Maritime Administration. The Swedish Transport Administration is funding the project.
