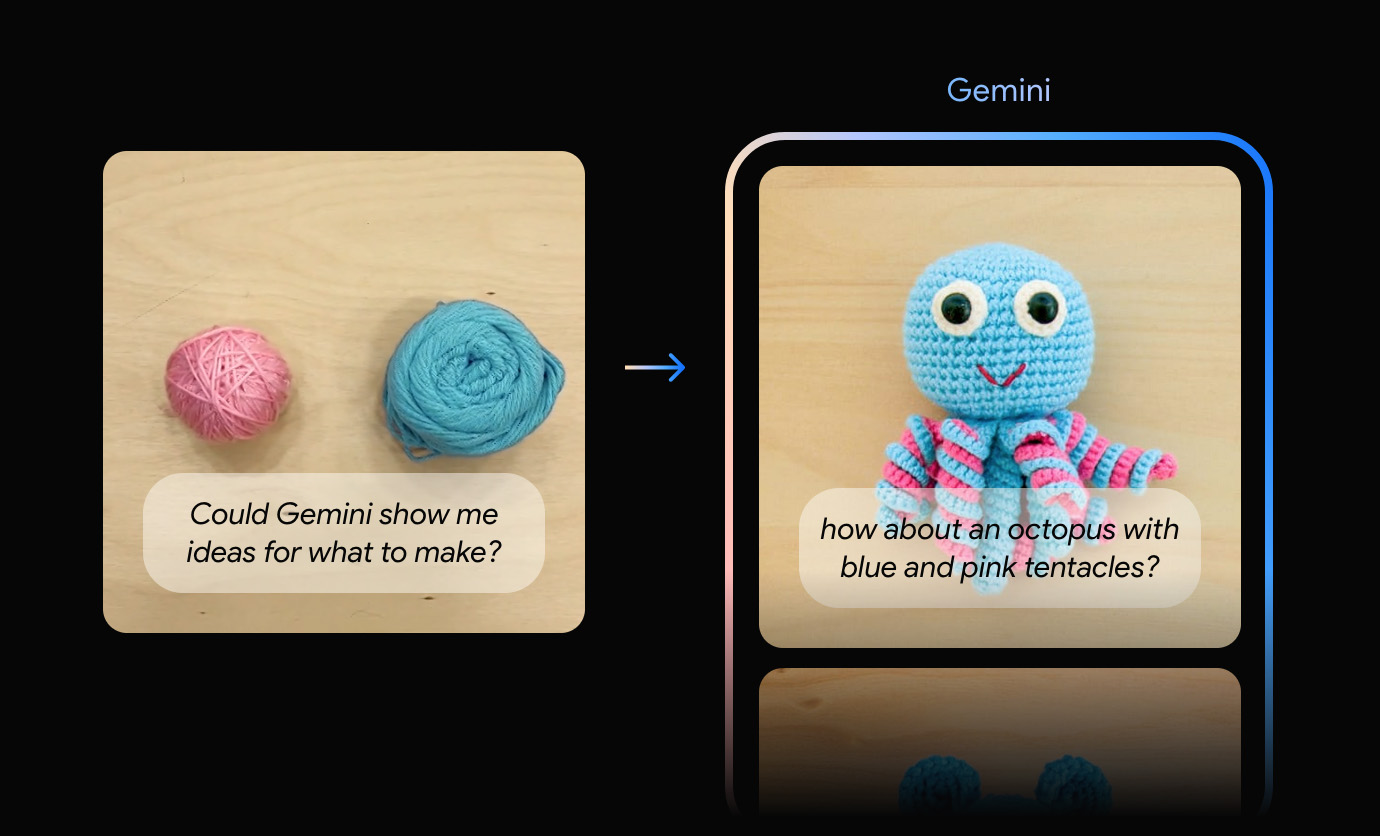

In a groundbreaking announcement, Google has introduced Gemini, a revolutionary generation of AI models inspired by human understanding and interaction with the world. The collaborative efforts of teams across Google, including Google Research, have culminated in the development of Gemini, designed from scratch to seamlessly comprehend and integrate various types of information such as text, code, audio, image, and video.

Gemini, now revealed as Google’s largest and most versatile AI model, boasts unprecedented flexibility, capable of efficient operation across a spectrum of devices, from data centers to mobile platforms. This state-of-the-art model is poised to reshape the landscape for developers and enterprise customers, enhancing their ability to build and scale with AI.

Gemini 1.0 comes in three optimized versions to cater to diverse needs:

– Gemini Ultra: The most advanced model tailored for highly complex tasks.

– Gemini Pro: Optimal for scaling across a wide array of tasks.

– Gemini Nano: The most efficient model designed for on-device tasks.

Rigorous testing of Gemini models across various tasks showcases their prowess. Gemini Ultra, in particular, surpasses current benchmarks, achieving a remarkable 90.0% on the Massive Multitask Language Understanding (MMLU) test, outperforming human experts. Moreover, Gemini Ultra excels in the new Multimodal Multitask Understanding (MMMU) benchmark, achieving a state-of-the-art score of 59.4%.

The Gemini series exhibits remarkable capabilities in image benchmarks, outperforming previous state-of-the-art models without relying on object character recognition (OCR) systems. Gemini’s native multimodality and advanced reasoning abilities shine through, especially in complex subjects like mathematics and physics.

Gemini 1.0’s proficiency extends to understanding and generating high-quality code in popular programming languages, making it a leading foundational model for coding worldwide. Its capabilities in various coding benchmarks, including HumanEval and Natural2Code, position it as a formidable tool for developers.

Google’s AI infrastructure, powered by Tensor Processing Units (TPUs) v4 and v5e, played a pivotal role in training Gemini 1.0. The introduction of Cloud TPU v5p, the most powerful TPU system to date, is expected to accelerate Gemini’s development and facilitate faster training of large-scale generative AI models.

Gemini 1.0 is already rolling out across products and platforms. Notably, Bard, a key application, will leverage a fine-tuned version of Gemini Pro for advanced reasoning and understanding. Additionally, Pixel 8 Pro becomes the first smartphone engineered to run Gemini Nano, introducing new features like Summarize and Smart Reply.

In the coming months, Gemini will be integrated into more Google products and services, including Search, Ads, Chrome, and Duet AI. Early experiments with Gemini in Search have already yielded a 40% reduction in latency in the United States, coupled with improvements in quality.

Developers and enterprise customers can access Gemini Pro via the Gemini API in Google AI Studio or Google Cloud Vertex AI starting December 13. Android developers will also harness the power of Gemini Nano via AICore in Android 14, available on Pixel 8 Pro devices. Moreover, Google plans to launch Bard Advanced, offering cutting-edge AI experiences with access to Gemini Ultra, early next year.

Discover more from SNAP TASTE

Subscribe to get the latest posts sent to your email.