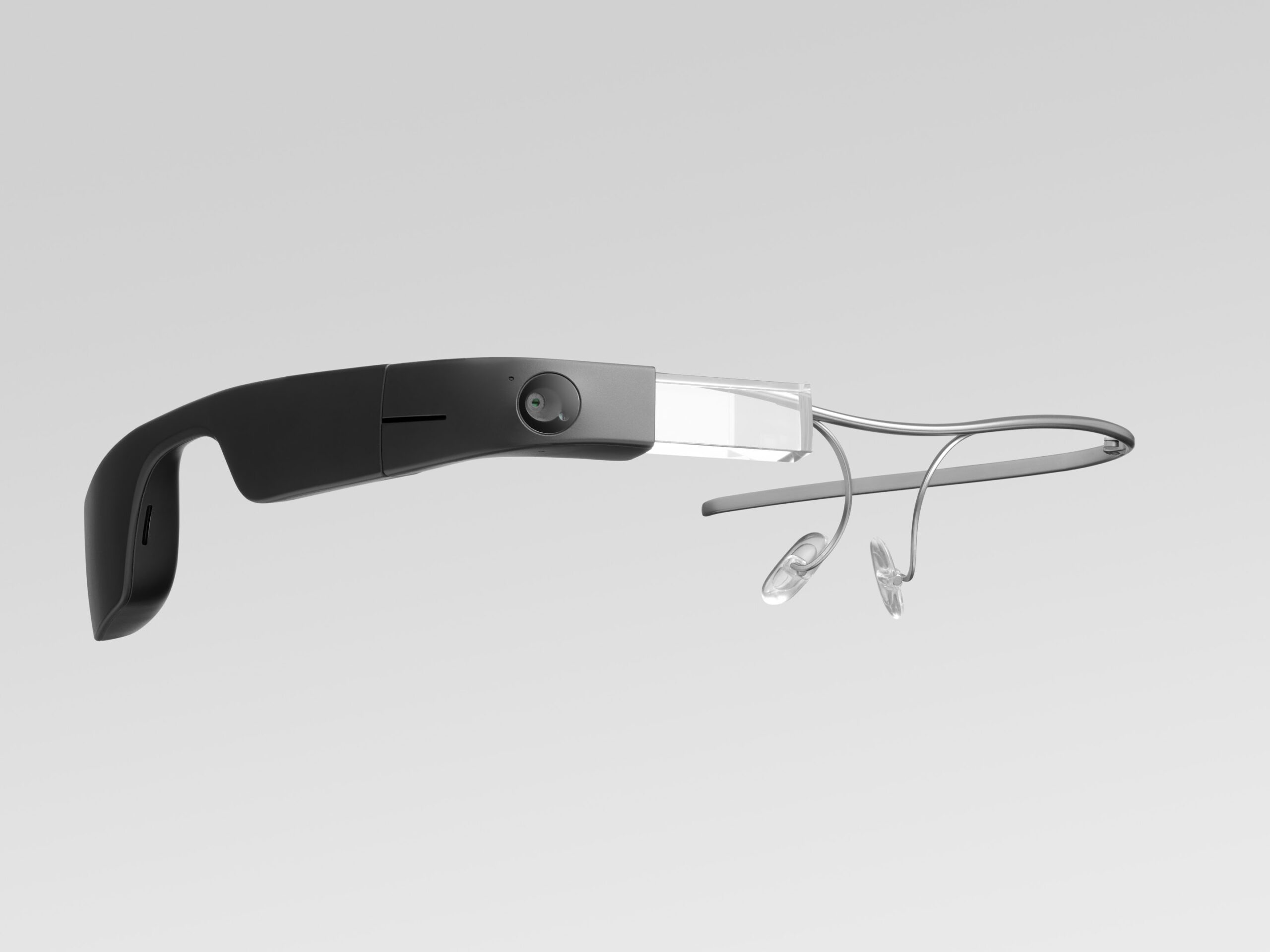

Envision, which launched in 2021, can recognize faces, objects, and colors, and can even describe the scene around the user. It can also translate any type of text into more than 60 languages. Today the company announces the launch of Ask Envision, a virtual visual assistant built on OpenAI's ChatGPT, for all editions of its smart glasses.

The new technology adds utility and context to text scanned by the Envision Glasses, making it easier for users to access information and live more independently. Ask Envision can deliver more in-depth information about any piece of text the glasses scan, or answer questions about it. With a single tap within the Scan Text feature, users can scan a text, ask their questions, and hear the answers spoken aloud by the glasses in the language of their choice.

Ask Envision is now available to all Envision Glasses customers, along with several other key features. These include Ally Video Calling, which lets users make hands-free video calls to chosen family members and friends directly from the smart glasses for personal support and visual interpretation, and Aira Integration, which lets users call Aira agents directly from their Envision Glasses for professional, human-to-human visual interpreting, 24/7.

Scene Recognition gives the user a visual interpretation of the scene in front of the glasses, while Facial Recognition lets users teach their smart glasses to recognize faces, so that the glasses can tell them who is in the room or at a location whenever they recognize a face.

Envision Glasses also come with Instant Text, Scan Text, and Batch Scan. Instant Text reads short text, such as signs and labels, aloud, while Scan Text reads longer text, such as letters and documents.

Layout Detection provides a more natural reading experience by interpreting a document's layout and giving the user verbal guidance. Envision Glasses also offer Enhanced Offline Language Capabilities, which accurately recognize four additional Asian languages when offline, and Bank Note/Currency Recognition, which recognizes banknotes in over 100 currencies.

Document Guidance for Accurate Capture removes the frustration of taking multiple images to capture a document's complete text, and Optimized Optical Character Recognition has significantly improved image capture and interpretation accuracy by processing tens of millions of data points from the Envision Glasses and apps.
